A company with 500,000 employees needs to supply its employee list to an application used by human resources. Every 30 minutes, the data is exported from the LDAP service and loaded into a new Amazon DynamoDB table. The data model has a base table with Employee ID as the partition key and a global secondary index (GSI) with Organization ID as the partition key.
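To make the data model concrete, here is a minimal sketch of the table definition as a Python dict shaped like the parameters of boto3's DynamoDB `create_table` call. The table, attribute, and index names (`Employees`, `EmployeeId`, `OrganizationId`, `OrganizationIndex`) and the read-capacity values are hypothetical placeholders; only the 50,000 WCU figure comes from the scenario. Nothing is sent to AWS.

```python
# Hypothetical names and placeholder read capacity; only the key layout and the
# 50,000 WCU figure come from the scenario. The dict mirrors the keyword arguments
# of boto3.client("dynamodb").create_table(...), but no AWS call is made here.
create_table_params = {
    "TableName": "Employees",
    "AttributeDefinitions": [
        {"AttributeName": "EmployeeId", "AttributeType": "S"},
        {"AttributeName": "OrganizationId", "AttributeType": "S"},
    ],
    # Base table: one item per employee, so the partition key has high cardinality.
    "KeySchema": [{"AttributeName": "EmployeeId", "KeyType": "HASH"}],
    "BillingMode": "PROVISIONED",
    "ProvisionedThroughput": {"ReadCapacityUnits": 100, "WriteCapacityUnits": 50_000},
    "GlobalSecondaryIndexes": [
        {
            "IndexName": "OrganizationIndex",
            # GSI: many employees share one Organization ID, so this key has
            # low cardinality and every base-table write is also written here.
            "KeySchema": [{"AttributeName": "OrganizationId", "KeyType": "HASH"}],
            "Projection": {"ProjectionType": "ALL"},
            "ProvisionedThroughput": {"ReadCapacityUnits": 100, "WriteCapacityUnits": 50_000},
        }
    ],
}
```

The point of the sketch is the contrast between the two `KeySchema` entries: the base table is keyed on a unique value, while the GSI is keyed on a value shared by many items.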

While importing the data, a database specialist receives ProvisionedThroughputExceededException errors.

After increasing the provisioned write capacity units (WCUs) to 50,000, the specialist still receives the same errors. Amazon CloudWatch metrics show that only 1,500 WCUs are consumed.
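One way to see how throttling can occur while only 1,500 of 50,000 provisioned WCUs are consumed is to count how many items land on each partition key value. The sketch below is a simulation under stated assumptions: the 500,000 employees come from the scenario, but the organization count (20) and the skewed size distribution are hypothetical. It uses the documented DynamoDB ceiling of 1,000 WCU per second for a single partition.

```python
from collections import Counter
import random

NUM_EMPLOYEES = 500_000      # from the scenario
NUM_ORGS = 20                # hypothetical: the scenario gives no organization count
PARTITION_WCU_LIMIT = 1_000  # documented per-partition write ceiling (WCU per second)


def simulate_import(seed: int = 42) -> tuple[Counter, Counter]:
    """Count items per partition key value for the base table and for the GSI."""
    rng = random.Random(seed)
    orgs = [f"ORG-{i:02d}" for i in range(NUM_ORGS)]
    # Hypothetical Zipf-like skew: organization i has weight 1 / (i + 1).
    weights = [1 / (i + 1) for i in range(NUM_ORGS)]
    org_of_employee = rng.choices(orgs, weights=weights, k=NUM_EMPLOYEES)

    # Base table key: every item has its own distinct EmployeeId.
    base_keys = Counter(f"EMP-{n:06d}" for n in range(NUM_EMPLOYEES))
    # GSI key: every item is also written under its (shared) OrganizationId.
    gsi_keys = Counter(org_of_employee)
    return base_keys, gsi_keys


if __name__ == "__main__":
    base_keys, gsi_keys = simulate_import()
    hottest_org, hottest_count = gsi_keys.most_common(1)[0]
    print(f"base table: {len(base_keys)} distinct keys, "
          f"at most {max(base_keys.values())} item per key")
    print(f"GSI: {len(gsi_keys)} distinct keys, {hottest_org} holds {hottest_count} items")
    # Assuming items of 1 KB or less (1 WCU per write), all writes for one key value
    # share a single partition, so they need at least this many seconds to drain.
    print(f"minimum drain time for {hottest_org}: "
          f"{hottest_count / PARTITION_WCU_LIMIT:.0f} s at {PARTITION_WCU_LIMIT} WCU/s")
```

Under these assumptions the base table spreads writes evenly, while a few GSI key values each receive tens of thousands of writes that one partition must absorb. Because a throttled GSI in provisioned mode back-pressures writes to the base table, the import can fail even though table-level consumption stays far below the provisioned WCUs.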

What should the database specialist do to address the issue?

A. Change the data model to avoid hot partitions in the global secondary index.

B. Enable auto scaling for the table to automatically increase write capacity during bulk imports.

C. Modify the table to use on-demand capacity instead of provisioned capacity.

D. Increase the number of retries on the bulk loading application.

While importing the data, a database specialist receives ProvisionedThroughputExceededException errors.

After increasing the provisioned write capacity units (WCUs) to 50,000, the specialist receives the same errors. Amazon CloudWatch metrics show a consumption of 1,500 WCUs.

What should the database specialist do to address the issue?

A. Change the data model to avoid hot partitions in the global secondary index.

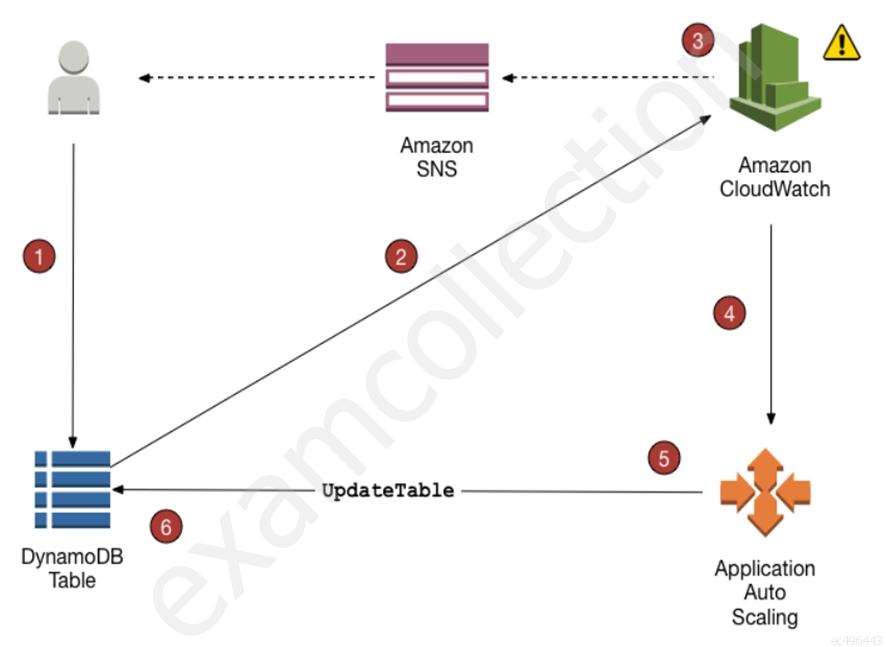

B. Enable auto scaling for the table to automatically increase write capacity during bulk imports.

C. Modify the table to use on-demand capacity instead of provisioned capacity.

D. Increase the number of retries on the bulk loading application.